Cultural and creative sectors occupy a significant place in today’s European economy, contributing to innovation, investment, digital modernisation and cultural tourism. The Cultural and Creative Industries (CCIs) generate around €509 billion per year, representing 5.3% of the EU’s total GDP, and account for 12 million full-time jobs, or 7.5% of EU employment, making them the third largest employer sector in the EU (European Commission, 2018). Beyond the economic value they add to the EU’s GDP, cultural and creative sectors promote European culture within and beyond the EU’s borders. In a political context characterised by the questioning of the European project, cultural and creative sectors have the potential to strengthen European identities, cultural diversity and values; foster critical thinking; and build bridges between art, culture, business and technology in order to bring European citizens closer together.

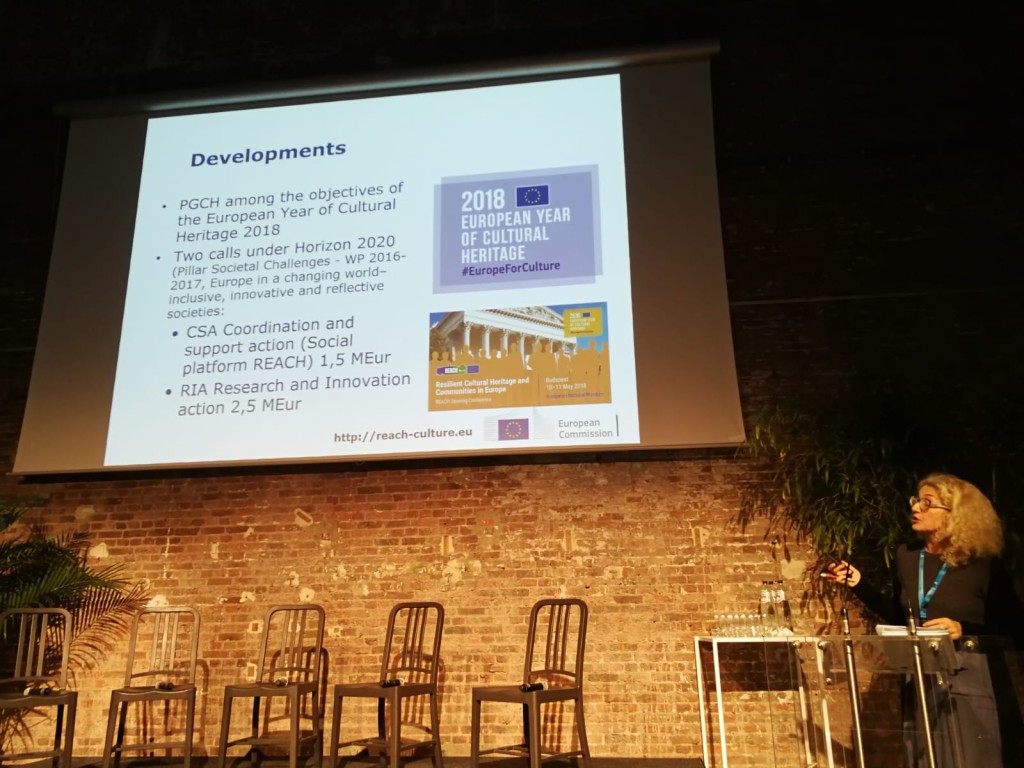

In recent years, the EU has developed various projects under the 2015-18 Work Plan for Culture and the Horizon 2020 programme to finance and support Cultural and Creative Industries. However, market fragmentation, insufficient access to finance and uncertainty over salary conditions continue to undermine cultural participation and development. In response to the Council’s invitation to do more in the cultural sector, in May 2018 the European Commission adopted a proposal for a New European Agenda for Culture. The New Agenda aims to harness the power of culture and cultural diversity for social cohesion; bolster the common European identity; support jobs and growth in cultural and creative sectors; and strengthen international cultural relations.

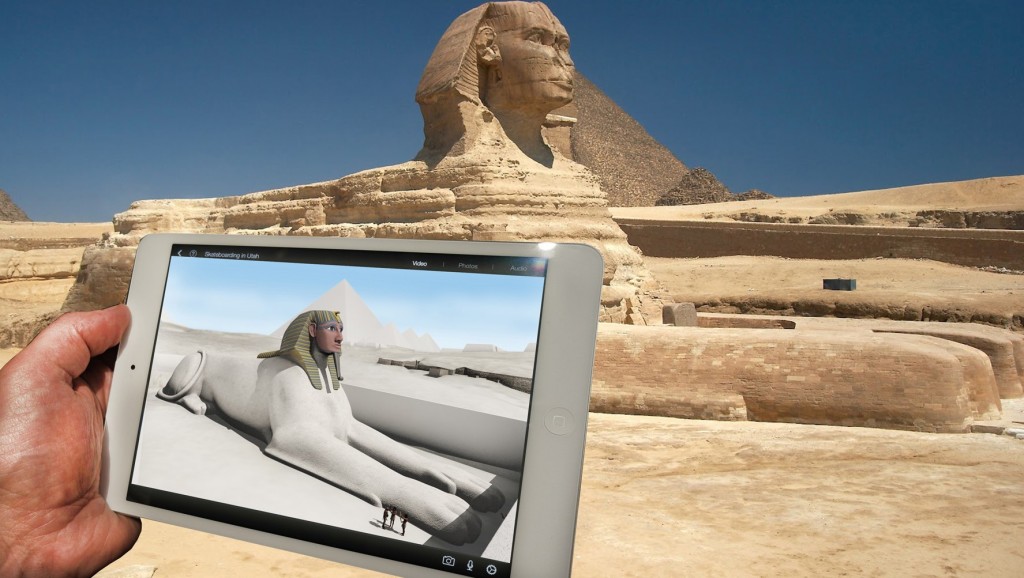

With the advance of globalisation and digitalisation in modern life, the New European Agenda for Culture embraces innovation in cultural and creative sectors. This goes hand in hand with adapting education and training to address the technological and business skills deficit and bring about change in the cultural sector. Moreover, continuous assessment is needed to ensure that the regulatory framework adequately addresses intellectual property rights, consumer protection, online distribution and the rules on establishing and operating a business in a digital era.

This timely symposium will evaluate recent EU initiatives and policy developments in the field of Cultural and Creative Industries aimed at tackling the emerging challenges facing the sector. Participants will exchange views, perspectives and good practices on possible strategies to address these challenges, and will debate the role of CCIs in national politics, education, international communication and social inclusion.

Delegates will:

- Find out about the European initiatives to boost the CCI sector, such as the New Agenda for Culture of 2018

- Discuss the CCIs’ role in creating social cohesion and a shared identity within the EU

- Explore ways to adapt to the changes in the creative and cultural sectors in a global and digital era

- Identify ways to get access to the European market for companies operating in the CCIs

- Analyse methods to tackle current challenges in the cultural sectors such as artists’ mobility and contract stability

- Learn from good practices of other Member States aimed at supporting the creative and cultural sectors based on cultural exchanges

To view our brochure, including the full event programme, click here.

20% early registration discount off the standard delegate rates (subject to type of organisation and terms and conditions) for bookings received by 28 December 2018

Website and registration: https://www.publicpolicyexchange.co.uk/events/JB06-PPE2?ss=bk&tg=bp1

Four international workshops on different aspects of participation will be held within the framework of REACH. The first one is running right now in Berlin.


Held in November 2018 in Brussels, the Fair was a wonderful occasion to see how the EU is promoting research & innovation in cultural heritage, fostering creativity and new connections across countries. The event showcased the latest innovations in cultural heritage, discussing why they are developed and how they can benefit both society and the market. Participants had the opportunity to join the ‘


The 21 full papers, 47 project papers, and 29 short papers presented at the


Sustainable development of urban areas is a key challenge. Urbanisation is expected to intensify further in the future, raising new environmental and human challenges. Currently, 74% of the European Union’s population lives in cities, which constitute the main poles of human and economic activity (UN, 2018). Large urban areas are hubs for innovation and education and concentrate 53% of the EU’s GDP, but they remain centres of inequality and greenhouse gas emissions (Eurostat, 2016). However, the development of user-friendly and efficient information and communication technology (ICT) paves the way for the creation of sustainable, resilient and responsive cities.
